embellishing: present participle of em·bel·lish (Verb)

Verb:

- Make (something) more attractive by the addition of decorative details or features: "blue silk embellished with golden embroidery".
- Make (a statement or story) more interesting or entertaining by adding extra details, esp. ones that are not true.

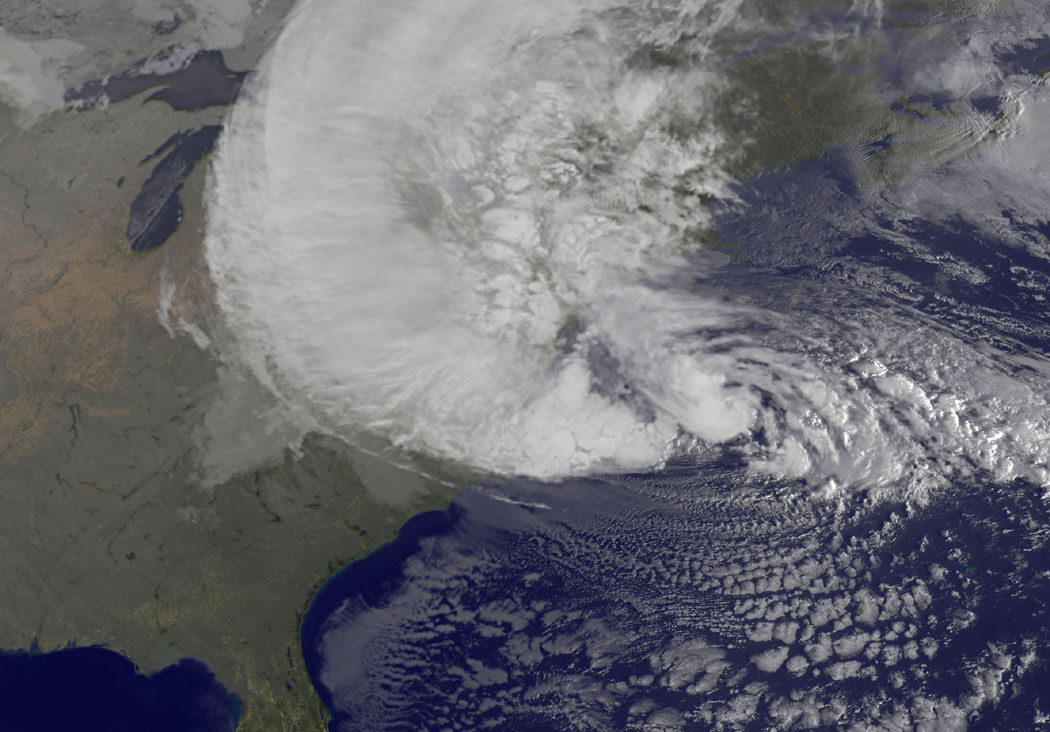

Yesterday, before heading back to the National Hurricane Center to help deal with Sandy, Chris Landsea gave a great talk here at CU on hurricanes and climate change (we'll have a video up soon). In Chris' talk he explained that he has no doubts that humans affect the climate system through the emission of greenhouse gases, and this influence may affect tropical cyclones. He then proceeded to review theory and data from recent peer-reviewed publications on the magnitude of such an influence. Chris argued that any such influence is expected to be small today, almost certainly undetectable, and that this view is not particularly controversial among tropical cyclone climatologists. He concluded that hurricanes should not be the "poster" representing a human influence on climate.

After his talk someone in the audience asked him what is wrong with making a connection between hurricanes and climate change if it gives the general public reason for concern about climate change. Chris responded that asserting such a connection can be easily shown to be incorrect and thus risks some of the trust that the public has in scientists to play things straight.

This exchange came to mind when I encountered the latest exhibit in the climate science freak show, this time in the form of a lawsuit brought by Michael Mann, of Penn State, against the National Review Online and others for calling his work "intellectually bogus" and other mean things (the actual filing can be seen here). I will admit that for a moment I did smile at the idea of a professor suing a critic for lying (Hi Joe!), before my senses took back over and I rejected it as an absurd publicity stunt. But within this little tempest in a teapot is a nice example of how some parts of climate science found themselves off track and routinely in violation of what many people would consider basic scientific norms.

In Mann's lawsuit he characterizes himself as having been "awarded the Nobel Peace Prize." Mann's claim is what might be called an embellishment -- he has, to use the definition found at the top of this post, "made (a statement or story) more interesting or entertaining by adding extra details, esp. ones that are not true." An accurate interpretation is that the Intergovernmental Panel on Climate Change did win the 2007 Nobel Peace Prize, and the IPCC did follow that award by sending to the AR4 authors a certificate noting their contributions to the organization. So instead of being a "Nobel Peace Prize Winner" Mann was one of 2,000 or so scientists who made a contribution to an organization which won the Nobel Peace Prize.

Here we might ask, so what?

I mean really, who cares if a scientist embellishes his credentials a bit? We all know what he means by calling himself a "Nobel Peace Prize Winner," right? And really, what is an organization except for the people that make it up? Besides, climate change is important; why should we worry about such things? Doesn't this just distract from the cause of action on climate change and play right into the hands of the deniers? Really now, is this a big deal?

Well, maybe it was not a big deal last week, but with the filing of the lawsuit, the embellishment now has potential consequences in a real-world decision process.

A journalist contacted the Nobel organization and asked whether it was appropriate for Mann, as an IPCC scientist, to claim to be a "Nobel Peace Prize winner." Here is what the Nobel organization said in response:

Michael Mann has never been awarded the Nobel Peace Prize.

Mann's embellishment has placed him in a situation where his claims are being countered by the Nobel organization itself. Rather than boosting his credibility, Mann's claim actually risks having the opposite effect, a situation that was entirely avoidable and one which Mann brought upon himself by making the embellishment in the first place. The embellishment is only an issue because Mann has invoked it as a source of authority in a legal dispute. Common sense suggests that having such an embellishment within a complaint predicated on alleged misrepresentations may not sit well with a judge or jury.

This situation provides a nice illustration of what is wrong with some aspects of climate science today: a few scientists, motivated by a desire to influence political debates over climate change, have embellished claims, such as those related to disasters, which then puts their credibility at risk when the claims are exposed as embellishments. To make matters worse, these politically motivated scientists have fallen in with fellow travelers in the media, activist organizations and the blogosphere who are willing not only to look past such embellishments, but to amplify them and attack those who push back. These dynamics are self-reinforcing and have led small but vocal parts of the climate science community to deviate significantly from widely held norms of scientific practice.

Back in 2009, Mann explained why the title of his climate book, Dire Predictions, was an embellishment, and his explanation sheds light on why a small part of the community thinks that such embellishments are acceptable:

Often, in our communication efforts, scientists are confronted with critical issues of language and framing. A case in point is a book I recently co-authored with Penn State colleague Lee Kump, called Dire Predictions: Understanding Global Warming. The purists among my colleagues would rightly point out that the potential future climate changes we describe, are, technically speaking, projections rather than predictions because the climate models are driven by hypothetical pathways of future fossil fuel burning (i.e. conceivable but not predicted futures). But Dire Projections doesn’t quite roll off the tongue. And it doesn’t convey -- in the common vernacular -- what the models indicate: Climate change could pose a very real threat to society and the environment. In this case, use of the more technically “correct” term is actually less likely to convey the key implications to a lay audience.

So long as some climate scientists are willing to put "correct" in scare quotes when describing their own work, out of a desire to shape public opinion, they are going to face credibility problems. Think Dick Cheney linking Al Qaeda to Saddam Hussein, and you'll understand why such efforts are not good for either science or democracy.

The late Stephen Schneider gained some fame for observing that when engaging in public debates scientists face a difficult choice between honesty and effectiveness (as quoted in TCF pp. 202-203):

On the one hand, as scientists we are ethically bound to the scientific method, in effect promising to tell the truth, the whole truth, and nothing but -- which means that we must include all the doubts, the caveats, the ifs, ands, and buts. On the other hand, we are not just scientists but human beings as well. And like most people we’d like to see the world a better place, which in this context translates into our working to reduce the risk of potentially disastrous climatic change. To do that we need to get some broad-based support, to capture the public’s imagination. That, of course, entails getting loads of media coverage. So we have to offer up scary scenarios, make simplified, dramatic statements, and make little mention of any doubts we might have. This “double ethical bind” we frequently find ourselves in cannot be solved by any formula. Each of us has to decide what the right balance is between being effective and being honest.

Often overlooked is what Schneider recommended about how to handle this "double ethical bind":

I hope that means being both [effective and honest].

That is a bit of advice that a few in the climate science community nowadays seem to have forgotten as they make embellishments for a cause. Such actions have consequences for how the whole field is perceived, and that field is made up mostly of hard-working, honest scientists who deserve better.